New method enables more realistic hair simulation

When a person has a bad hair day, that's unfortunate. When a virtual character has bad hair, an entire animated video or film can look unrealistic. A new method developed by Disney Research makes it possible to simulate hair realistically by estimating simulation parameters from observations of real hair in motion.

One advantage of the new method is that once the simulation parameters have been determined, the animation can be edited so that a hairstyle responds realistically to changes in head motion, to changes in the hair's condition (wet, oily or treated with styling products), and to external forces such as wind.

"The core idea is very simple: we do an educated guess of the simulation parameters and then use input data to validate this hypothesis," said Thabo Beeler, senior research scientist and leader of Disney Research's Capture and Effects Group. "It's an approach that applies to virtually any hair simulation technique."

The Disney researchers will present their method at the European Association for Computer Graphics annual conference, Eurographics 2017, April 24-28 in Lyon, France.

"After the face, hairstyle is one of the most important features that define an animated character, so modeling and animating hair is an area of intense research interest," noted Markus Gross, vice president at Disney Research. "Simulating tens of thousands of hair strands is difficult, so this work to improve the capture of real hair motion from video is a major step forward."

Animating hair by hand is impractical, so physical simulation of hair is the method of choice for the animated film and computer game industries. Still, setting the physical parameters for every fiber is impractical, Beeler said, and creating a digital model to simulate even a single hairstyle requires a lot of trial and error.

To obtain specific hairstyles, recent research has focused on using cameras to capture the behavior of real hair, in much the same way as facial performance capture obtains facial expressions from an actor to create a virtual character. Some methods not only capture static hairstyles from images but can also reconstruct the motion of the hair. But, Beeler noted, these methods can only play back and re-render the hair from different points of view; the animation can't be edited to account for different external forces or head motions.

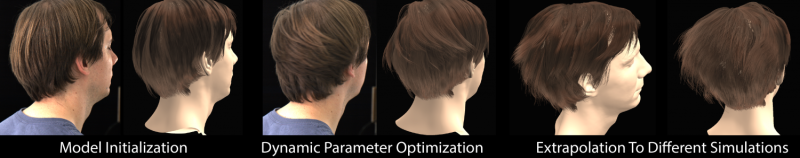

The framework devised by the Disney researchers is the first method for estimating hair parameters that considers the dynamics of hair and fits the parameters to captured hair in motion. These parameters can be used to re-simulate the captured hair and also to edit the animation by changing head motion, the physical properties of the hair, such as wetness, and external forces.
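The guess-and-validate idea can be illustrated with a toy sketch. This is not the researchers' implementation: a single point mass on a damped spring stands in for a full hair simulator, a brute-force grid search stands in for whatever optimizer the paper uses, and every name below (`simulate`, `mismatch`, `fit_parameters`, the stiffness and damping values) is hypothetical. The "captured" motion is synthetic, generated from known parameters so the fit can be checked.

```python
import math


def simulate(stiffness, damping, head_motion, dt=0.01):
    """Toy stand-in for a hair simulator (assumption, not the paper's model):
    one unit-mass point tied to a moving 'head' by a damped spring."""
    x, v = 0.0, 0.0
    trajectory = []
    for head in head_motion:
        # Semi-implicit Euler: update velocity first, then position.
        a = -stiffness * (x - head) - damping * v
        v += a * dt
        x += v * dt
        trajectory.append(x)
    return trajectory


def mismatch(simulated, captured):
    """Mean squared difference between simulated and captured motion."""
    return sum((s - c) ** 2 for s, c in zip(simulated, captured)) / len(captured)


def fit_parameters(captured, head_motion, stiffness_candidates, damping_candidates):
    """Try each parameter guess, simulate, and keep the guess that best
    matches the captured motion (brute-force grid search)."""
    best = None
    for k in stiffness_candidates:
        for c in damping_candidates:
            err = mismatch(simulate(k, c, head_motion), captured)
            if best is None or err < best[0]:
                best = (err, k, c)
    return best[1], best[2], best[0]


# Synthetic "capture": motion produced by known parameters (hypothetical values).
head_motion = [math.sin(0.05 * i) for i in range(400)]
captured = simulate(40.0, 2.0, head_motion)

stiffness_grid = [10.0 * i for i in range(1, 9)]  # 10.0 .. 80.0
damping_grid = [0.5 * i for i in range(1, 9)]     # 0.5 .. 4.0
fitted_stiffness, fitted_damping, fit_error = fit_parameters(
    captured, head_motion, stiffness_grid, damping_grid
)

# Editing step: reuse the fitted parameters with a different head motion.
new_head_motion = [0.5 * math.sin(0.1 * i) for i in range(400)]
edited = simulate(fitted_stiffness, fitted_damping, new_head_motion)
```

Because the synthetic capture was generated on the same grid, the search recovers the stiffness and damping used to create it. Real footage would not match any candidate exactly, and a continuous optimizer would likely replace the grid. The last two lines mirror the editing use case: the fitted parameters drive a new simulation under a head motion that was never captured.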

This approach can be used with a wide variety of simulation methods, as the researchers demonstrated by using it in conjunction with two state-of-the-art simulation models. The same framework, they said, could be used to estimate parameters for even more sophisticated simulation models as they are developed in the future.

More information: "Simulation-Ready Hair Capture"

Provided by Disney Research