Kinect for Windows helps decode the role of hand gestures during conversations

We all know that human communication involves more than speaking: think of how much an angry glare or an acquiescent nod says. But beyond such obvious body language, we also use our hands extensively while talking. Although these conversational hand gestures are ubiquitous, they are difficult to analyze, and it is hard to know whether and how these spontaneous, speech-accompanying movements shape communication processes and outcomes. Behavioral scientists want to understand the role of these nonverbal behaviors. So, too, do technology creators, who are eager to build tools that help people exchange and understand messages more smoothly.

To decipher what our hands are doing when we talk to others, researchers need to record traces of hand movements during a conversation and analyze those traces reliably and cost-efficiently. Professor Hao-Chuan Wang and his team at National Tsing Hua University in Taiwan realized that they could solve this problem by using a Kinect for Windows sensor to capture and record both the hand gestures and the spoken words of a person-to-person conversation.

"We thought to use Kinect because it's one of the most popular and available motion sensors in the market. The popularity of Kinect can increase the potential impact of the proposed method," Wang explains. "It will be easy for other researchers to apply our method or replicate our study. It's also possible to run large-scale behavioral studies in the field, as we can collect behavioral data of users remotely as long as they are Kinect users. Kinect's software development kit is also … easy to work with."

With these advantages in mind, Wang teamed up with Microsoft Research Asia to capture hand movements during conversation by using Kinect for Windows. "I knew that Microsoft Research was conducting some advanced research with Kinect," he notes, "so we were very interested in working with Microsoft researchers to deliver more good stuff to the research community and society."

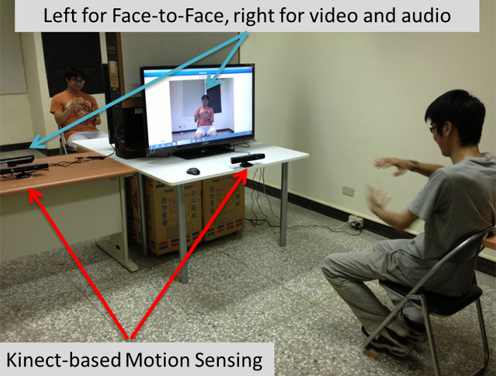

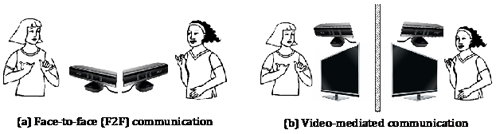

In the resulting collaborative study, the team placed two Kinect sensors back-to-back between the two conversation participants to document each session. The sensors captured the speech and hand movements of both interlocutors simultaneously, providing a time-stamped recording of each person's spoken words and hand traces.

To demonstrate the utility of the approach, the researchers compared the amount and similarity of hand movements under three conditions: face-to-face conversation, video-mediated chat, and audio-mediated chat. The two participants could see each other during the face-to-face and video conversations, but they could not see one another during the audio chat.

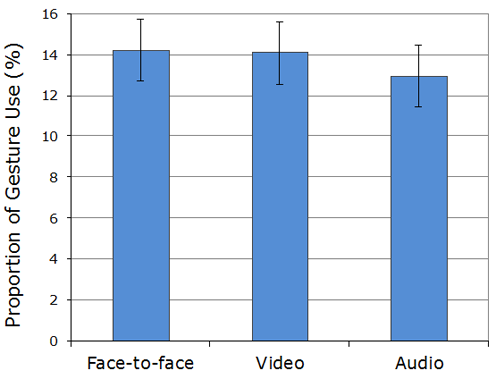

The researchers encountered some unexpected results. "First, perhaps counter-intuitively, we found that people actually gesture as much in an audio chat as in face-to-face and video chat. This suggests that people gesture probably not for others but for themselves, as it's clear that people are still moving their hands even when their partners cannot see it," Wang remarks.

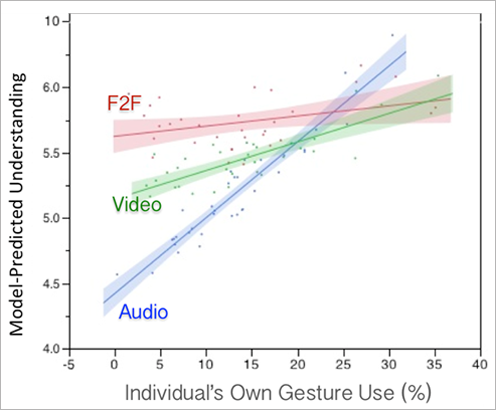

The investigators also examined the relationship between each participant's comprehension of the conversation as a whole and the amount of gesturing. Surprisingly, they found no correlation between a participant's level of understanding and how much that participant's conversation partner gestured. Instead, they found an association between a participant's understanding of the conversation and that participant's own gesturing. This supports the possibility that hand movements are not necessarily "body language" for producing and conveying messages, but rather self-reinforcers or signifiers related to the consumption of messages.

Using Kinect sensors in this way has broad potential applications, serving both theoretical and practical goals. For instance, researchers could deploy field studies to examine the usability of particular communication tools (for example, whether Skype gets people to move their hands when they talk). Researchers may also be able to use the technique to accumulate experience-oriented design insights at a speed and scale that were previously unattainable.

"It's easy to set up and program Kinect, so it greatly reduces the overhead of applying it to cross-disciplinary research, where the goal is to spend time on studying and solving the domain problems rather than technical troubleshooting," Wang explains.

A full paper about Wang's collaborative project with Microsoft Research Asia was presented at CHI 2014, the ACM SIGCHI Conference on Human Factors in Computing Systems, held in Toronto, Canada, in April 2014.

"I really enjoyed working with Microsoft Research Asia. I received both great support and freedom to pursue the topics of interest to me. This makes the collaboration really unique and valuable," Professor Wang says, "and I hope to closely collaborate with Microsoft researchers to scale up the current work. The proposed method has the potential to help us better understand communication behaviors in unconventional communication settings, such as cross-cultural and cross-linguistic communications, and in educational discourse, such as teacher-student interactions. Because language-based communication often doesn't go well in these situations, the non-verbal part may become more functional. Deeper understanding of the processes is likely to inform the design of technologies to better support these situations."

More information: Kinect-taped communication: using motion sensing to study gesture use and similarity in face-to-face and computer-mediated brainstorming. Hao-Chuan Wang, Chien-Tung Lai. CHI '14 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pages 3205-3214, ACM New York, NY, USA (2014). dl.acm.org/citation.cfm?id=2557060

Source: Microsoft