February 4, 2013 feature

Human hearing beats the Fourier uncertainty principle

(Phys.org)—For the first time, physicists have found that humans can discriminate a sound's frequency (related to a note's pitch) and timing (whether a note comes before or after another note) more than 10 times better than the limit imposed by the Fourier uncertainty principle. Not surprisingly, some of the subjects with the best listening precision were musicians, but even non-musicians could exceed the uncertainty limit. The results rule out the majority of auditory processing brain algorithms that have been proposed, since only a few models can match this impressive human performance.

The researchers, Jacob Oppenheim and Marcelo Magnasco at Rockefeller University in New York, have published their study on the first direct test of the Fourier uncertainty principle in human hearing in a recent issue of Physical Review Letters.

The Fourier uncertainty principle states that a time-frequency tradeoff exists for sound signals: the shorter the duration of a sound, the wider the spread of frequencies required to represent it. Conversely, sounds with tight clusters of frequencies must have longer durations. The uncertainty principle limits the precision of the simultaneous measurement of the duration and frequency of a sound.
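The article does not spell out the inequality. In its standard signal-processing form (often called the Gabor limit), with $\sigma_t$ and $\sigma_f$ taken as the standard deviations of a signal's energy distribution in time and in frequency, it reads:

$$
\sigma_t \, \sigma_f \;\ge\; \frac{1}{4\pi} \approx 0.0796
$$

Equality holds only for Gaussian-shaped pulses. The symbols here are a notational assumption, and the study's acuity measures are discrimination thresholds rather than standard deviations, so this is a guide to the scale of the limit rather than a restatement of the paper's analysis. Read this way, performance ten times better than the limit corresponds to a product below roughly 0.008.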

To investigate human hearing in this context, the researchers turned to psychophysics, an area of study that uses various techniques to reveal how physical stimuli affect human sensation. Using physics, these techniques can establish tight bounds on the performance of the senses.

An ear for precision

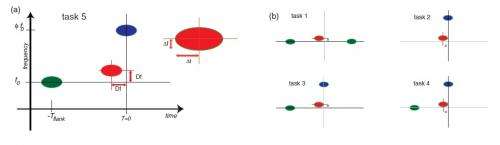

To test how precisely humans can simultaneously measure the duration and frequency of a sound, the researchers asked 12 subjects to perform a series of listening tasks leading up to a final task. In the final task, the subjects were asked to discriminate simultaneously whether a test note was higher or lower in frequency than a leading note that was played before it, and whether the test note appeared before or after a third note, which was discernible due to its much higher frequency.

When a subject correctly discriminated the frequency and timing of a note twice in a row, the difficulty level would increase so that both the difference in frequency between the notes and the time between the notes decreased. When a subject responded incorrectly, both separations would increase again to make the task easier.
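The adaptive rule described here resembles what psychophysicists call a two-down, one-up staircase. A minimal sketch of such a rule follows. The function name, the multiplicative step, the floor and ceiling, and the choice to reset the streak after each step are all assumptions made for illustration; the article does not give the study's actual parameters.

```python
def run_staircase(responses, start_level=1.0, step=0.5, floor=0.01, ceiling=1.0):
    """Track a difficulty level over a sequence of trial outcomes.

    The level is a hypothetical scale factor applied to both the frequency
    difference and the time gap between notes: smaller means harder.
    """
    level = start_level
    streak = 0                # consecutive correct answers at the current level
    history = [level]
    for correct in responses:
        if correct:
            streak += 1
            if streak == 2:   # two correct in a row: shrink both separations
                level = max(floor, level * step)
                streak = 0    # assumption: the count restarts after each step
        else:
            streak = 0        # one error: widen both separations again
            level = min(ceiling, level / step)
        history.append(level)
    return history


if __name__ == "__main__":
    outcomes = [True, True, True, False, True, True]
    print(run_staircase(outcomes))
```

A rule of this kind makes the task harder only after repeated success, so the level tends to settle near the point where the listener is right more often than wrong but not always.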

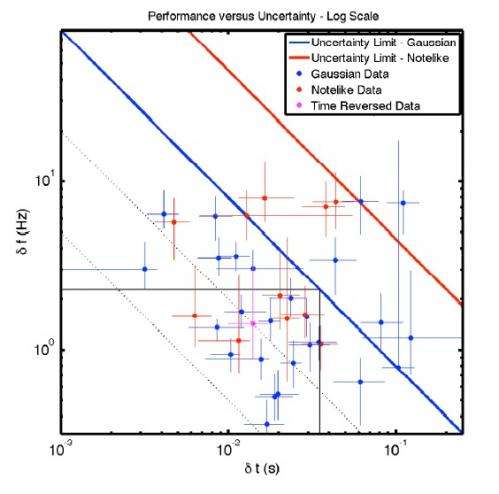

The researchers tested the subjects with two different types of sounds: Gaussian, characterized by a rise and fall that follows a bell curve shape; and note-like, characterized by a rapid rise and a slow exponential decay. According to the uncertainty principle, note-like sounds are more difficult to measure with high precision than Gaussian sounds.
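One way to see why pulse shape matters is to compute the time-bandwidth product numerically. The sketch below is illustrative only: the function names, the 10 ms widths, and the `t * exp(-t / tau)` envelope are assumptions chosen for convenience, not the stimuli used in the study. It relies on the standard result that a Gaussian envelope attains the lower bound of 1/(4π) on the product of the standard deviations in time and in frequency.

```python
import math

GABOR_LIMIT = 1.0 / (4.0 * math.pi)  # lower bound on sigma_t * sigma_f


def time_bandwidth_product(envelope, t_start, t_stop, n=20001):
    """Return sigma_t * sigma_f for a real envelope on a fixed carrier."""
    dt = (t_stop - t_start) / (n - 1)
    ts = [t_start + i * dt for i in range(n)]
    a = [envelope(t) for t in ts]
    # Central-difference derivative of the envelope (set to zero at the edges).
    da = [0.0] + [(a[i + 1] - a[i - 1]) / (2 * dt) for i in range(1, n - 1)] + [0.0]

    energy = sum(x * x for x in a) * dt
    mean_t = sum(t * x * x for t, x in zip(ts, a)) * dt / energy
    var_t = sum((t - mean_t) ** 2 * x * x for t, x in zip(ts, a)) * dt / energy
    # For a real envelope, the spectral variance about the carrier equals
    # integral(a'^2) / integral(a^2) / (4 pi^2), by Parseval's theorem.
    var_f = sum(d * d for d in da) * dt / energy / (4.0 * math.pi ** 2)
    return math.sqrt(var_t * var_f)


def gaussian_envelope(t, sigma=0.01):
    """Bell-curve rise and fall; sigma is the spread of the energy in time."""
    return math.exp(-t * t / (4.0 * sigma * sigma))


def note_like_envelope(t, tau=0.01):
    """Hypothetical stand-in: quick rise, then slow exponential decay."""
    return t * math.exp(-t / tau) if t > 0.0 else 0.0


if __name__ == "__main__":
    g = time_bandwidth_product(gaussian_envelope, -0.1, 0.1)
    n = time_bandwidth_product(note_like_envelope, -0.01, 0.3)
    print(f"limit     {GABOR_LIMIT:.4f}")
    print(f"Gaussian  {g:.4f}")
    print(f"note-like {n:.4f}")
```

Under these assumptions the Gaussian pulse sits at the bound, while the asymmetric pulse has a larger product (analytically, √3 times the bound for this particular envelope). That is consistent with the expectation that note-like sounds are the harder case for any linear analysis.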

But as it turns out, the subjects could discriminate both types of sounds with equally impressive performance. While some subjects excelled at discriminating frequency, most did much better at discriminating timing. The top score, achieved by a professional musician, violated the uncertainty principle by a factor of about 13, due to equally high precision in frequency acuity and timing acuity. The score with the top timing acuity (3 milliseconds) was achieved by an electronic musician who works in precision sound editing.

The researchers think that this superior human listening ability is partly due to the spiral structure and nonlinearities in the cochlea. Previously, scientists have proven that linear systems cannot exceed the time-frequency uncertainty limit. Although most nonlinear systems do not perform any better, any system that exceeds the uncertainty limit must be nonlinear. For this reason, the nonlinearities in the cochlea are likely integral to the precision of human auditory processing. Since researchers have known for a long time about the cochlea's nonlinearities, the current results are not quite as surprising as they would otherwise be.

"It is and it is not [surprising]," Magnasco told Phys.org. "We were surprised, yet we expected this to happen. The thing is, mathematically the possibility existed all along. There's a theorem that asserts uncertainty is only obeyed by linear operators (like the linear operators of quantum mechanics). Now there's five decades of careful documentation of just how nastily nonlinear the cochlea is, but it is not evident how any of the cochlea's nonlinearities contributes to enhancing time-frequency acuity. We now know our results imply that some of those nonlinearities have the purpose of sharpening acuity beyond the naïve linear limits.

"We were still extremely surprised by how well our subjects did, and particularly surprised by the fact that the biggest gains appear to have been, by and large, in timing. You see, physicists tend to think hearing is spectrum. But spectrum is time-independent, and hearing is about rapid transients. We were just told, by the data, that our brains care a great deal about timing."

New sound models

The results have implications for how we understand the way that the brain processes sound, a question that has interested scientists for a long time. In the early 1970s, scientists found hints that human hearing could violate the uncertainty principle, but the scientific understanding and technical capabilities were not advanced enough to enable a thorough investigation. As a result, most of today's sound analysis models are based on old theories that may now be revisited in order to capture the precision of human hearing.

"In seminars, I like demonstrating how much information is conveyed in sound by playing the sound from the scene in Casablanca where Ilsa pleads, 'Play it once, Sam,' Sam feigns ignorance, Ilsa insists," Magnasco said. "You can recognize the text being spoken, but you can also recognize the volume of the utterance, the emotional stance of both speakers, the identity of the speakers including the speaker's accent (Ingrid's faint Swedish, though her character is Norwegian, which I am told Norwegians can distinguish; Sam's AAVE [African American Vernacular English]), the distance to the speaker (Ilsa whispers but she's closer, Sam loudly feigns ignorance but he's in the back), the position of the speaker (in your house you know when someone's calling you from another room, in which room they are!), the orientation of the speaker (looking at you or away from you), an impression of the room (large, small, carpeted).

"The issue is that many fields, both basic and commercial, in sound analysis try to reconstruct only one of these, and for that they may use crude models of early hearing that transmit enough information for their purposes. But the problem is that when your analysis is a pipeline, whatever information is lost on a given stage can never be recovered later. So if you try to do very fancy analysis of, let's say, vocal inflections of a lyric soprano, you just cannot do it with cruder models."

By ruling out many of the simpler models of auditory processing, the new results may help guide researchers to identify the true mechanism that underlies human auditory hyperacuity. Understanding this mechanism could have wide-ranging applications in areas such as speech recognition; sound analysis and processing; and radar, sonar, and radio astronomy.

"You could use fancier methods in radar or sonar to try to analyze details beyond uncertainty, since you control the pinging waveform; in fact, bats do," Magnasco said.

Building on the current results, the researchers are now investigating how human hearing is more finely tuned toward natural sounds, and also studying the temporal factor in hearing.

"Such increases in performance cannot occur in general without some assumptions," Magnasco said. "For instance, if you're testing accuracy vs. resolution, you need to assume all signals are well separated. We have indications that the hearing system is highly attuned to the sounds you actually hear in nature, as opposed to abstract time-series; this comes under the rubric of 'ecological theories of perception' in which you try to understand the space of natural objects being analyzed in an ecologically relevant setting, and has been hugely successful in vision. Many sounds in nature are produced by an abrupt transfer of energy followed by slow, damped decay, and hence have broken time-reversal symmetry. We just tested that subjects do much better in discriminating timing and frequency in the forward version than in the time-reversed version (manuscript submitted). Therefore the nervous system uses specific information on the physics of sound production to extract information from the sensory stream.

"We are also studying with these same methods the notion of simultaneity of sounds. If we're listening to a flute-piano piece, we will have a distinct perception if the flute 'arrives late' into a phrase and lags the piano, even though flute and piano produce extended sounds, much longer than the accuracy with which we perceive their alignment. In general, for many sounds we have a clear idea of one single 'time' associated to the sound, many times, in our minds, having to do with what action we would take to generate the sound ourselves (strike, blow, etc)."

More information: Jacob N. Oppenheim and Marcelo O. Magnasco. "Human Time-Frequency Acuity Beats the Fourier Uncertainty Principle." PRL 110, 044301 (2013). DOI: 10.1103/PhysRevLett.110.044301

Journal information: Physical Review Letters
